[Paper reading] Deep Learning for Anomaly Detection: A Survey.

This was the first paper presented in our company's Data Science Read Club. Many teams work on anomaly detection, using both classical ML and deep learning, so an overview of the area is useful.

1. Introduction

1.2 What are Anomalies?

Anomalies: instances that stand out as being dissimilar to the others. Anomalies are sometimes also called abnormalities, deviants, or outliers. They arise for multiple reasons: malicious actions, system failures, intentional fraud, etc.

1.3 What are Novelties?

Novelty detection is different from anomaly detection. It is the identification of novel (new) or previously unobserved patterns in the data, so novelties are not necessarily anomalies. For example, the first time you see a white tiger after having seen only ordinary tigers, it is novel to you, but a white tiger is still classified as a type of tiger.

1.4 Different Aspects of Deep Learning

For the anomaly detection task, deep learning approaches can be described along the following aspects:

- Nature of data

  - sequential (time series, text)

  - non-sequential (image)

- Based on availability of labels

  - supervised

  - semi-supervised

  - unsupervised

- Based on training objectives

  - deep hybrid models: use a NN as a feature extractor (e.g. AutoEncoders); the features learned in the hidden representations of the AutoEncoder are fed into a traditional anomaly detection algorithm.

  - one-class NN: learn a representation of the data that creates a tight envelope around the normal data, inspired by algorithms like OC-SVM.

- Type of anomaly

  - point anomalies: individual data points that represent an irregularity or deviation.

  - contextual anomalies: also called conditional anomalies; a data point that is anomalous only in a specific context.

  - collective (group) anomalies: each data point in the group looks normal on its own, but the group as a whole is anomalous compared with other groups.

- Output of model

  - anomaly score: can be normalized, e.g. to be read as a probability

  - labels
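To make the anomaly types and the two output forms concrete, here is a minimal sketch using plain z-scores instead of a deep model. The data, the function names and the threshold of 2.0 are all made up for illustration.

```python
from collections import defaultdict
from statistics import mean, pstdev


def zscores(values):
    """Absolute z-score of every value relative to the whole list."""
    mu, sigma = mean(values), pstdev(values)
    return [abs(v - mu) / sigma if sigma > 0 else 0.0 for v in values]


def contextual_zscores(records):
    """Score each (context, value) pair only against values from the same context."""
    groups = defaultdict(list)
    for context, value in records:
        groups[context].append(value)
    stats = {c: (mean(vals), pstdev(vals)) for c, vals in groups.items()}
    scores = []
    for context, value in records:
        mu, sigma = stats[context]
        scores.append(abs(value - mu) / sigma if sigma > 0 else 0.0)
    return scores


def min_max(scores):
    """Rescale scores to [0, 1]. A convenience, not a calibrated probability."""
    lo, hi = min(scores), max(scores)
    return [(s - lo) / (hi - lo) if hi > lo else 0.0 for s in scores]


def to_labels(scores, threshold):
    """Model output as labels: 1 = anomaly, 0 = normal."""
    return [1 if s >= threshold else 0 for s in scores]


# Point anomaly: 25.0 is far from everything else, whatever the context.
readings = [10.1, 9.8, 10.3, 10.0, 25.0, 9.9]
point_scores = zscores(readings)
normalized_scores = min_max(point_scores)      # output as a score in [0, 1]
point_labels = to_labels(point_scores, 2.0)    # output as labels

# Contextual anomaly: 30 degrees is ordinary in summer but unusual in winter.
temps = [("summer", 29.0), ("summer", 30.0), ("summer", 31.0),
         ("summer", 30.0), ("summer", 29.5),
         ("winter", 2.0), ("winter", 3.0), ("winter", 1.0),
         ("winter", 30.0), ("winter", 2.5), ("winter", 1.5)]
global_labels = to_labels(zscores([t for _, t in temps]), 2.0)
context_labels = to_labels(contextual_zscores(temps), 2.0)

print(point_labels)        # the 25.0 reading is flagged
print(sum(global_labels))  # scoring without context misses the warm winter day
print(context_labels)      # scoring per season flags it
```

The warm winter day is only caught when the score is conditioned on the season, which is the point of treating contextual anomalies separately from point anomalies.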

2. Application of Deep Anomaly Detection

I won’t list too many details here; the main areas include:

- Intrusion detection

- Fraud detection

- Malware detection

- Medical anomaly detection

- Social networks

- Log anomaly detection

- IoT big data anomaly detection

- Industrial anomaly detection

- Time series anomaly detection

- Video surveillance

3. Deep Anomaly Detection Models

3.1 Supervised Deep Anomaly Detection

Assumptions: depends on the given labels and requires a large amount of training data.

Pros:

- more accurate than semi-supervised and unsupervised models

- test (predict) stage is fast using trained models

Cons:

- good labels are not easy to get

- fails to separate normal from anomalous data if the feature space is highly complex and non-linear

3.2 Semi-supervised Deep Anomaly Detection

Assumptions: models are trained on a single class label (normal data) and learn a discriminative boundary around the normal data. To flag anomalies, they rely on:

- proximity and continuity: points that are close to each other in both input space and feature space are more likely to share the same label

- robust features are learned within the hidden layers of the deep neural network and retain the discriminative attributes

Pros:

- models like GANs trained in semi-supervised mode have shown great promise even with very little labeled data

- using labeled data (usually of one class) can produce considerable performance improvement over unsupervised techniques

Cons:

- the hierarchical features extracted within hidden layers may not be representative of the few anomalous instances, hence are prone to over-fitting
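The one-class workflow (fit on normal data only, then flag whatever falls outside the learned boundary) can be sketched as below. This is only an illustration: a per-feature Gaussian profile stands in for the deep model, and the data, function names and the 0.95 quantile are assumptions of mine, not taken from the paper.

```python
from statistics import mean, pstdev


def fit_normal_profile(normal_rows):
    """Per-feature mean and std, estimated from normal samples only."""
    columns = zip(*normal_rows)
    # Fall back to 1.0 for a constant feature to avoid dividing by zero.
    return [(mean(col), pstdev(col) or 1.0) for col in columns]


def anomaly_score(profile, row):
    """Sum of squared z-scores: larger means further from the normal profile."""
    return sum(((x - mu) / sigma) ** 2 for x, (mu, sigma) in zip(row, profile))


def threshold_from_normal(profile, normal_rows, quantile=0.95):
    """Boundary chosen so that about `quantile` of the normal data lies inside it."""
    scores = sorted(anomaly_score(profile, r) for r in normal_rows)
    index = min(len(scores) - 1, int(quantile * len(scores)))
    return scores[index]


# Training set contains the normal class only.
normal_train = [(1.0, 10.0), (1.2, 9.5), (0.8, 10.5), (1.1, 10.2),
                (0.9, 9.8), (1.0, 10.1), (1.3, 9.9), (0.7, 10.0)]
profile = fit_normal_profile(normal_train)
threshold = threshold_from_normal(profile, normal_train)

# At test time, anything scoring above the boundary is flagged.
for row in [(1.05, 10.1), (3.0, 10.0), (1.0, 12.0)]:
    score = anomaly_score(profile, row)
    print(row, round(score, 2), "anomaly" if score > threshold else "normal")
```

No anomalous sample is needed to set the threshold, which is why the boundary can only be as good as the normal training data is representative.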

3.3 Hybrid Deep Anomaly Detection

Assumptions: NN models are used as feature extractors to learn robust features. The hybrid models employ two-step learning:

- robust features are extracted within hidden layers

- a robust anomaly detection model is built on the extracted features
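The two steps above can be sketched as follows. In a real hybrid model the first step would be the hidden layer of a trained network such as an AutoEncoder; here `extract_features` is a hypothetical hand-written stand-in so that the example stays self-contained, and a k-nearest-neighbour distance plays the role of the traditional detector.

```python
import math


def extract_features(window):
    """Step 1 (stand-in for an encoder): map a raw window to a short feature vector."""
    avg = sum(window) / len(window)
    spread = max(window) - min(window)
    return (avg, spread)


def knn_score(point, reference, k=2):
    """Step 2 (traditional detector): mean distance to the k nearest reference points."""
    dists = sorted(math.dist(point, r) for r in reference)
    return sum(dists[:k]) / k


# Windows assumed to be normal, used as the reference set for the detector.
normal_windows = [
    [1.0, 2.0, 1.0, 2.0],
    [1.2, 2.1, 0.9, 1.8],
    [0.9, 1.9, 1.1, 2.1],
    [1.1, 2.0, 1.0, 1.9],
]
reference = [extract_features(w) for w in normal_windows]

typical_score = knn_score(extract_features([1.0, 2.0, 1.1, 1.9]), reference)
anomalous_score = knn_score(extract_features([1.0, 9.0, 1.0, 2.0]), reference)
print(typical_score, anomalous_score)
```

Note that the detector never feeds anything back into `extract_features`; the two steps are fitted independently, which is the property the cons below refer to.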

Pros:

- the extracted features greatly reduce the “curse of dimensionality”, especially in high-dimensional domains

- more scalable and computationally efficient, since the linear or non-linear kernel models operate on reduced input dimensions

Cons:

- the hybrid approach is suboptimal because the anomaly detector cannot influence representation learning within the hidden layers of the feature extractor, since generic loss functions are used instead of an objective customized for anomaly detection.

- deeper hybrid models tend to perform better, which adds computational cost

3.4 One-Class Neural Networks

Assumptions:

- OC-NN models extract the common factors of variation in the data distribution within the hidden layers of a deep neural network

- they perform combined representation learning and produce an anomaly score

- anomalies don’t contain these common factors, hence the hidden layers fail to capture the representation of anomalies

Pros:

- OC-NN models jointly train a deep neural network while optimizing a data-enclosing hypersphere or hyperplane in output space

- the OC-NN work proposes an alternating minimization algorithm for learning the parameters of the model

Cons:

- training time and model update time may be longer for high-dimensional data

Here I actually don’t see the main difference from semi-supervised models, since semi-supervised models are also usually trained on data from a single label.
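For reference, the hyperplane variant of the OC-NN objective can be written roughly as below. This is how I recall it from the OC-NN paper (Chalapathy, Menon and Chawla), so treat the notation as an assumption and check it against the paper: $x_n$ are the $N$ training samples, $V$ the hidden-layer weights, $g$ the activation, $w$ the output weights, $r$ the bias of the hyperplane, and $\nu \in (0, 1)$ a parameter that controls how many training points are allowed to fall on the wrong side.

```latex
\min_{w,\,V,\,r}\;
  \frac{1}{2}\lVert w \rVert_2^2
  + \frac{1}{2}\lVert V \rVert_F^2
  + \frac{1}{\nu}\cdot\frac{1}{N}\sum_{n=1}^{N}
      \max\bigl(0,\; r - \langle w,\, g(V x_n)\rangle\bigr)
  - r
```

Replacing the learned features $g(V x_n)$ with a fixed kernel feature map gives the OC-SVM objective, so the distinguishing part is that the representation is trained with the one-class loss itself. With $w$ and $V$ held fixed, the best $r$ is the $\nu$-quantile of the scores $\langle w, g(V x_n)\rangle$, which is what the alternating minimization exploits.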

3.5 Unsupervised Deep Anomaly Detection

Assumptions:

- the normal region in the feature space can be distinguished from the anomalous region

- the majority of the data is normal

Pros:

- learns the inherent data characteristics to separate normal from anomalous data

- a cost-effective way to find anomalies, since it doesn’t require labels

Cons:

- it is often difficult to learn commonalities within data in a complex and high-dimensional space

- parameters are not easy to tune

- sensitive to noise, and usually less accurate than supervised or semi-supervised models
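A minimal sketch of the unsupervised setting: no labels at all, only the assumption that most of the data is normal. A robust median/MAD score stands in for a deep model here, and `contamination` (the fraction of points assumed anomalous) is a hypothetical parameter name chosen for illustration.

```python
from statistics import median


def mad_scores(values):
    """Robust deviation score: |x - median| / MAD, computed without any labels."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return [0.0 for _ in values]
    return [abs(v - med) / mad for v in values]


def flag_top_fraction(scores, contamination=0.2):
    """Flag the highest-scoring fraction of points; returns their indices."""
    n_flag = max(1, round(contamination * len(scores)))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:n_flag])


# Unlabeled data, assumed to be mostly normal.
data = [5.0, 5.2, 4.9, 5.1, 5.0, 12.0, 4.8, 5.3, 5.1, -3.0]
scores = mad_scores(data)
flagged = flag_top_fraction(scores, contamination=0.2)
print(flagged)  # indices of the points treated as anomalies
```

The result depends directly on `contamination`: set it too high and normal points get flagged, too low and real anomalies are missed, which is one concrete form of the tuning difficulty listed above.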

3.6 Miscellaneous Techniques

- Transfer Learning based

- Zero Shot Learning based

- Clustering based

- Deep Reinforcement Learning based

- Statistical Techniques based

4. Commonly used Neural Network Architectures

For more details, check the reference papers listed in the survey.

- Deep Neural Networks

- Spatial Temporal Networks

- Sum-Product Networks

- Word2Vec Models

- Generative Models

- Convolutional Neural Networks

- Sequence Models

- AutoEncoders

5. Summary

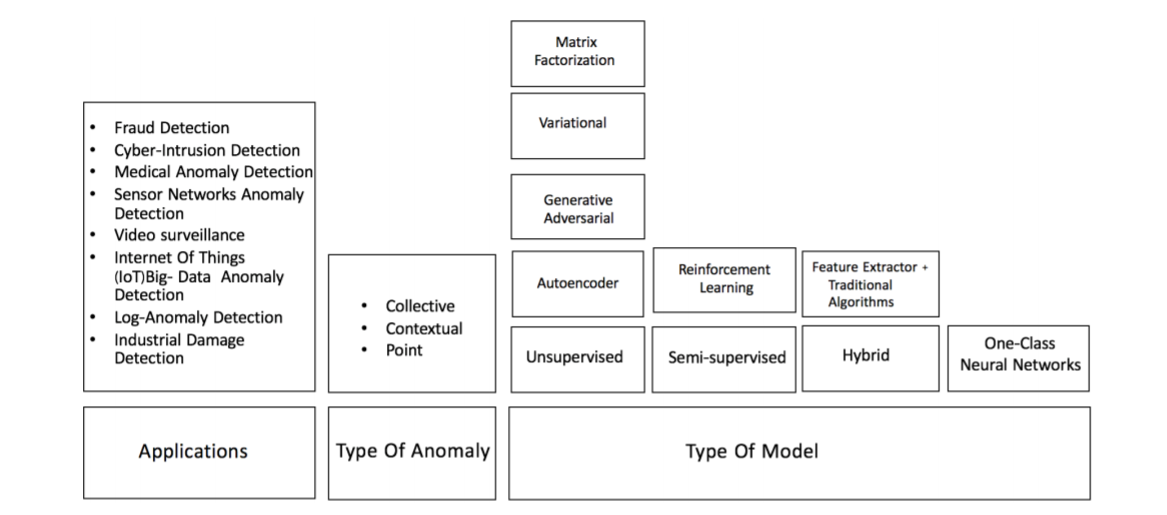

Summarize approaches in one figure(refer from paper):