[Paper reading]: Ad Click Prediction: a View from the Trenches.

Online advertising not only provides multi-billion dollars revenues to companies, but also serves as one of the great success stories for machine learning. This paper from Google provides a overview of ad click prediction with many practical and industrial experiences. It is a “must read” paper for people who work in computational ads area. To be honest, I still don’t quite understand every point in the paper, I’ll update when I get better :)

1. Introduction

The central problem for this multi-billion dollars industry is predicting CTR, sponsored search advertising, contextual advertising, display advertising and real-time bidding auctions, etc, all heavily rely on it. In this paper, the authors present a selection of case studies and topics drawn from recent experiments in the setting of a deployed CTR prediction system as well as some of the challenges that arise in a real-world system, includes

- improvements in the context of traditional supervised learning based on an FTRL-Proximal online learning algorithm and the user of per-coordinate learning rates

- useful tricks for memory saving

- methods for assessing and visualizing performance

- practical methods for providing confidence estimates for predicted probabilities

- calibration methods

- automated feature management methods

2. System Overview

When a user does a search with query \(q\), an initial set of candidate ads is matched to the query based on advertiser-chosen keywords. An auction mechanism then determines (1) whether these ads are shown to the user; (2) what order they are shown in; (3) what prices the advertisers pay if their ad is clicked. In addition to advertiser bids, an important input to the auction is for each ad \(a\), the estimated probability of the ad will be clicked if it is shown: \(P(\text{click}|q, a)\).

The features used in the system include:

- query

- text of the ad creative

- ad-related meta data

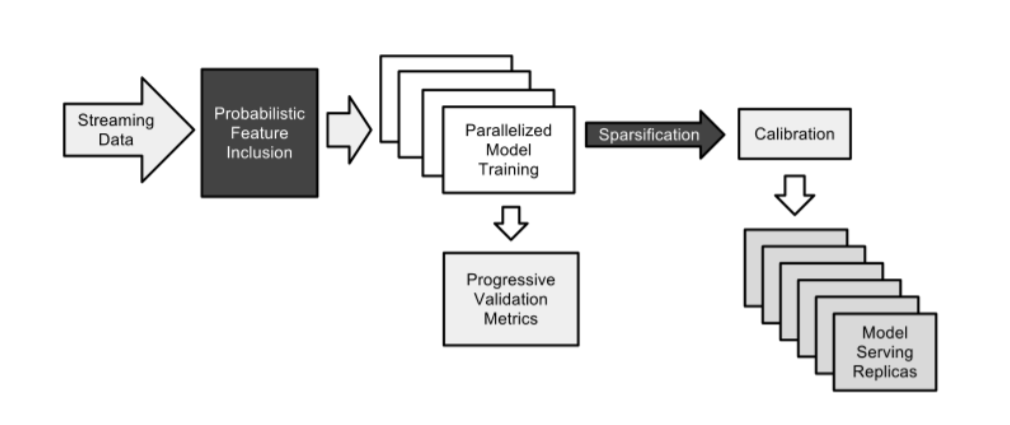

High-level system overview is like this:

3. Online Learning and Sparsity

For learning at massive scale, online learning algorithms for GLM (e.g. logistic regression) have many advantages. It enables efficient training on large datasets by streaming examples from disk or over network, each training example only needs to be consider once.

Denote vector \(\mathbf{g}_{t}\in R^{d}\), where \(t\) is the index of current training example, the \(i\)th entry of \(\mathbf{g}_{t}\) is \(g_{t,i}\), and \(\mathbf{g}_{1:t} = \sum^{t}_{s=1}\mathbf{g}_{s}\).

For logistic regression under online framework, on round \(t\) we need to predict samples described by feature vector \(\mathbf{x}_{t}\in R^{d}\), given model parameter \(\mathbf{w}_{t}\), the prediction is \(p_{t}=\sigma(\mathbf{w}_{t}\cdot \mathbf{x}_{t})\), where \(\sigma(\cdot)\) is the sigmoid function; then given the label \(y_{t}\in\{0,1\}\) and logistic loss function (negative log-likelihood)

\[

\ell_{t}(\mathbf{w}_{t}) = -y_{t}\log p_{t} - (1-y_{t})\log (1-p_{t}),

\]

then the gradient is

\[

\nabla \ell_{t}(\mathbf{w}) = (\sigma(\mathbf{w}\cdot \mathbf{x}_{t}) - y_{t})\mathbf{x}_{t}

\].

In practice, the key consideration is the size of the final model, the number of non-zero coefficients in \(\mathbf{w}\) is the determining factor of the memory usage.

OGD (Online Gradient Descent) is not very efficient for producing sparse models. More sophisticated approaches like FOBOS and truncated gradient do succeed in introducing sparsity. The RDA (Regularized Dual Averaging) produces better accuracy and sparsity trade-off than FOBOS. Then the question is can we get both the sparsity provided by RDA and improved accuracy of OGD? The answer is yes, using FTRL (Follow The Regularized Leader), or FTRL-Proximal. Without regularization, this algorithm is identical to standard oneline gradient descent, but since it uses an alternative lazy representation of the model coefficients, L1 regularization can be implemented much more effectively.

Given a sequence of gradients \(\mathbf{g}_{t} \in R^{d}\), OGD performs the update:

\[

\mathbf{w}_{t+1} = \mathbf{w}_{t} - \eta_{t}\mathbf{g}_{t},

\]

where \(\eta_{t}\) is a non-increasing learning rate schedule, e.g. \(\eta_{t} = 1/\sqrt{t}\). The FTRL-Proximal algorithm instead uses the update

\[

\mathbf{w}_{t+1} = \arg\min_{\mathbf{w}} \left(\mathbf{g}_{1:t}\cdot\mathbf{w} + \frac{1}{2}\sum^{t}_{s=1}\sigma_{s}||\mathbf{w} - \mathbf{w}_{s}||^{2}_{2} + \lambda_{1}||\mathbf{w}||_{1}\right),

\]

where \(\sigma_{s}\) is defined in terms of learning rate schedule such that \(\sigma_{1:t}=1/\eta_{t}\). When \(\lambda_{1} = 0\), it produces identical sequence of coefficient vectors. However, the FTRL-Proximal update with \(\lambda_{1}>0\) does excellent job of inducing sparsity.

Experiments shows that FTRL-Proximal with L1 regularization significantly outperformed both RDA and FOBOS in terms of the size-versus-accuracy trade-offs produced. Overall, FTRL-Proximal gives significantly improved sparsity with the same or better prediction accuracy.

4. Saving Memory at Massive Scale

4.1 Probabilistic Feature Inclusion

Huge sparsity is one of the problems in CTR prediction, the vast majority of features are extremely rare (in some of the model, half the unique features occur only once in the training set in the entrie training set of billions of examples).

It’s very expensive to track statistics for rare feature, and we don’t know in advance which features will be rare. Hashing also didn’t give useful benefits in experiments.

Therefore a new method is proposed called probabilistic feature inclusion, which means new features are included in the model probabilistically as they first occur. This can be implemented in an online setting. There are two methods:

- Poisson Inclusion: for a new feature that is not already in the model, it will be add it to the model with probability \(p\). Once a feature has been added, in subsequent observations we update its coefficient values and related statistics by OGD as per usual. The number of times a feature needs to be seen before it is added to the model follows a geometric distrbution with expectation value \(1/p\).

- Bloom Filter Inclusion: a rolling set of counting Bloom filters is used to detect the first \(n\) times a feature is encountered in training. Once a feature has occurred more than \(n\) times, it is added to the model and use it for training.

Experiments sho that the effect of these methods give good trade-off for RAM savings against loss in predictive quality.

4.2 Subsampling Training Data

Typical CTRs are much lower than 50% therefore clicks are relatively more valuable in learning CTR estimattes. We can reduce the training data size with minimal impact on accuracy. A subsampled training data at query level is created with:

- Any query for which at least one of the ads was clicked;

- A fraction \(r\) of the queries where none of the ads were clicked.

Which means the subsampling is done on negative samples.

Experiments show that even fairly aggressive subsampling of unclicked queries has a very mild impact on accuracy, and that predictive performance is not especially impacted.

Other topics related to saving memory include:

- Encoding Values with Fewer Bits

- Training Many Similar Models

- Single Value Structure

- Computing Learning Rates with Counts

5. Evaluating Model Performance

5.1 Progressive Validation

Instead of cross validation or validation on held out dataset, progressive validation is used. Because computing a gradient for learning requires computing a prediction anyway, we can cheaply stream those predictions out for subsequent analysis, aggregated hourly.

The online loss is a good proxy for accuracy in serving queries, because it measures the performance only on the most recent data before training on it - exactly analogous to what happens when the model serves queries.

Absolute metric values are often misleading. The metrics vary depending on the difficulty of the problem. Therefore relative changes is more important, usually expressed as a percent change in the metric relative to a baseline model. Experiements show that relative changes are much more stable over time, and it is calculated on same time period.

6. Confidence Estimates

Quantifying the expected accuracy of the prediction is important for many CTR applications, such estimates can be used to measure and control explore / exploit trade-offs.

Uncertainty score is proposed heuristically. The essential observation is that the learning algorithm itself maintains a notion of uncertainty in the per-feature counters \(n_{t,i}\) used for learning rate control, features for which \(n_{i}\) is large get a smaller learning rate (we believe current coefficient values are more likely to be accurate). The gradient of logistic loss respect to log odds ratio is \(p_{t} - y_{t}\), its absolute value is bounded by 1. Therefore we can bound the change in log odds ratio prediction due to observing a single training example.

7. Calibrating Predictions

The difference between the average predicted and observed CTR on some slice of data is the system bias. A calibration layer is added to match predicted CTRs to observed CTRs.

The predictions are calibrated on a slice of data \(d\) if on average when we predict \(p\), we can improve the prediction by applying correction function \(\tau_{d}(p)\). A simple way is fit a function \(\tau(p) = \gamma p^{\kappa}\) to the data, \(\gamma\) and \(\kappa\) can be learned by using Poisson regression.

8. Unsuccessful Experiments

Several failed experiments are also listed:

- Aggressive Feature Hashing

- Dropout

- Feature Bagging

- Feature Vector Normalization