[Paper reading]: DeepFM: A Factorization-Machine based Neural Network for CTR Prediction

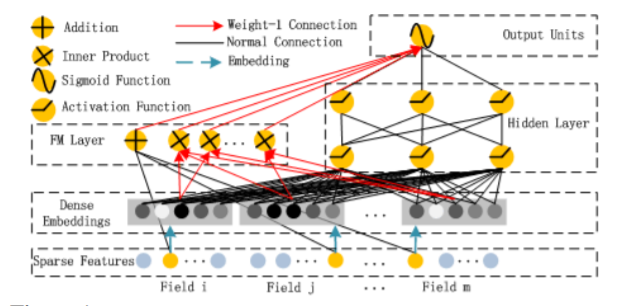

Google's Wide & Deep Learning framework combines logistic regression with a deep neural network. DeepFM instead combines a factorization machine with a deep neural network, and its two parts share the same input. In Wide & Deep, by contrast, the deep network takes raw features while the logistic regression takes engineered features.

1. Introduction

Learning the complicated feature interactions behind user behavior is critical for CTR prediction in recommender systems. For example, people often download food-delivery apps at mealtime, which suggests a 2-way interaction between app category and time of day. Male teenagers like shooting games and RPGs, which suggests a 3-way interaction among app category, user gender, and user age. However, many feature interactions are not intuitive and are difficult to identify a priori (e.g. the classic "diaper and beer" association rule, which was mined from data rather than discovered by experts). Even for easily understood interactions, it is very time-consuming for experts to model them exhaustively.

The purpose of DeepFM is to combine the power of FM and deep neural networks. The paper's main contributions are:

- DeepFM integrates FM and a deep neural network into a single model that can be trained end-to-end without any feature engineering.

- DeepFM can be trained efficiently because its wide (FM) part and deep part share the same input and the same embedding vectors. As a result, it learns both low- and high-order feature interactions directly from raw features, with no need for expert feature engineering.

2. DeepFM

The training data consists of \(n\) instances \((\mathbf{x}_{i}, y_{i})\), \(i = 1, 2, \cdots, n\). Each \(\mathbf{x} = [x_{\text{field 1}}, x_{\text{field 2}}, \cdots, x_{\text{field }m}]\) is a \(d\)-dimensional vector made up of \(m\) fields that describe the user and the item, and \(y \in \{0, 1\}\) indicates whether the user clicked the item. Categorical fields are encoded as one-hot vectors; continuous fields are either used as-is or discretized and then one-hot encoded.
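To make the \(m\)-field, \(d\)-dimensional layout concrete, here is a minimal sketch, assuming a small hypothetical schema with three categorical fields (the field names and vocabularies are made up for illustration). Each field is one-hot encoded and the field vectors are concatenated, so \(d\) is the total vocabulary size and exactly \(m\) entries of \(\mathbf{x}\) are non-zero.

```python
import numpy as np

# Hypothetical schema (illustration only): each field has a fixed vocabulary.
FIELDS = {
    "app_category": ["food", "games", "news"],
    "hour_bucket": ["morning", "noon", "evening"],
    "gender": ["male", "female"],
}

def field_offsets(fields):
    """Start index of each field inside the concatenated d-dim vector."""
    offsets, start = {}, 0
    for name, vocab in fields.items():
        offsets[name] = start
        start += len(vocab)
    return offsets, start  # the final start equals d

def encode(record, fields=FIELDS):
    """Return the one-hot vector x (length d) and the index of the active feature per field."""
    offsets, d = field_offsets(fields)
    x = np.zeros(d)
    active = []
    for name, vocab in fields.items():
        j = offsets[name] + vocab.index(record[name])
        x[j] = 1.0
        active.append(j)
    return x, active

x, active = encode({"app_category": "food", "hour_bucket": "noon", "gender": "male"})
print(len(x), active)  # 8 [0, 4, 6]
```

Real CTR datasets have millions of features, so implementations usually store only the active indices rather than the dense vector.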

For feature \(i\), a scalar \(w_{i}\) weighs its order-1 importance, and a latent vector \(V_{i}\) measures the impact of its interactions with other features. \(V_{i}\) is fed into the FM component to model order-2 feature interactions and into the deep component to model high-order feature interactions. All parameters, both those of the FM (\(w_{i}\), \(V_{i}\)) and those of the deep neural network (\(W^{(l)}, b^{(l)}\)), are trained jointly for the combined model:

\[
\hat{y} = \sigma\left(y_{FM} + y_{DNN}\right),
\]

where \(\hat{y} \in (0, 1)\) is the predicted CTR, and \(y_{FM}\) and \(y_{DNN}\) are the outputs of the FM component and the deep component, respectively.
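A minimal sketch of the joint parameter set and the output layer, assuming NumPy, Gaussian initialization with standard deviation 0.01, and a deep component whose input is the concatenation of the \(m\) field embeddings. `init_params` and `predict` are hypothetical helper names, not code from the paper. The point it illustrates is that there is a single `V`, read by both components, so training on the joint loss updates it from both sides.

```python
import numpy as np

def sigmoid(z):
    """Logistic function sigma(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

def init_params(d, k, m, hidden, seed=0):
    """One joint parameter set for DeepFM (illustrative layout, not the paper's code).

    d: input dimension, k: embedding length, m: number of fields,
    hidden: list of hidden-layer widths for the deep component.
    """
    rng = np.random.default_rng(seed)
    w = np.zeros(d)                           # order-1 weights w_i
    V = rng.normal(0.0, 0.01, size=(d, k))    # latent vectors V_i, shared by FM and DNN
    sizes = [m * k] + list(hidden) + [1]      # DNN input = m concatenated embeddings
    layers = [(rng.normal(0.0, 0.01, size=(n_in, n_out)), np.zeros(n_out))
              for n_in, n_out in zip(sizes[:-1], sizes[1:])]  # (W^(l), b^(l))
    return {"w": w, "V": V, "layers": layers}

def predict(y_fm, y_dnn):
    """DeepFM output: y_hat = sigmoid(y_FM + y_DNN), a click probability."""
    return sigmoid(y_fm + y_dnn)

params = init_params(d=8, k=4, m=3, hidden=[16, 16])
print([W.shape for W, _ in params["layers"]])  # [(12, 16), (16, 16), (16, 1)]
print(predict(0.0, 0.0))  # 0.5
```

Because both \(y_{FM}\) and \(y_{DNN}\) enter a single sigmoid, each is treated here as an unbounded score (a logit), and only their sum is squashed into a probability.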

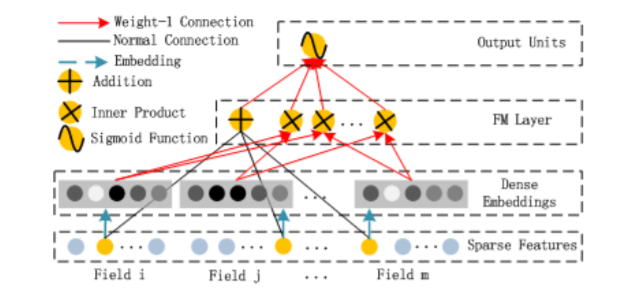

2.1 FM Component

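The FM component is a factorization machine, which effectively learns low-order (order-1 and 2-way) feature interactions via latent vectors. Because the weight of each feature pair is modeled as the inner product of the two features' latent vectors, FM can estimate an interaction even for pairs that rarely or never co-occur in the training data.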

As the figure shows, the FM layer contains one Addition unit, which computes the dot product of the order-1 features and the weight vector, and a number of Inner Product units, each of which takes the inner product of two latent (embedding) vectors. The output of FM is the sum of the Addition unit and all Inner Product units:

\[
y_{FM} = \langle \mathbf{w}, \mathbf{x} \rangle + \sum_{j_{1}=1}^{d}\sum_{j_{2}=j_{1}+1}^{d} \langle V_{j_{1}}, V_{j_{2}} \rangle \, x_{j_{1}} x_{j_{2}},
\]

where \(\mathbf{w} \in \mathbb{R}^{d}\), \(V_{i} \in \mathbb{R}^{k}\) is the latent vector of feature \(i\), and \(k\) is the length of the embedding vectors.
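The FM output can be checked numerically. Below is a minimal NumPy sketch, assuming randomly initialized parameters with arbitrary shapes (\(d = 8\), \(k = 4\)). `fm_naive` follows the equation term by term; `fm_fast` uses the standard reformulation of the pairwise term from Rendle's original Factorization Machines paper, which reduces the cost from \(O(kd^2)\) to \(O(kd)\). Both function names are hypothetical.

```python
import numpy as np

def fm_naive(w, V, x):
    """y_FM computed literally: <w, x> + sum_{j1<j2} <V_j1, V_j2> x_j1 x_j2."""
    d = len(x)
    y = w @ x                                   # Addition unit (order-1 term)
    for j1 in range(d):
        for j2 in range(j1 + 1, d):
            y += (V[j1] @ V[j2]) * x[j1] * x[j2]  # one Inner Product unit
    return y

def fm_fast(w, V, x):
    """Same value in O(k d), using
    sum_{j1<j2} <V_j1, V_j2> x_j1 x_j2
      = 0.5 * sum_f [ (sum_j V_jf x_j)^2 - sum_j V_jf^2 x_j^2 ]."""
    xv = x @ V                  # shape (k,): sum_j V_j x_j
    x2v2 = (x ** 2) @ (V ** 2)  # shape (k,): sum_j V_j^2 x_j^2
    return w @ x + 0.5 * np.sum(xv ** 2 - x2v2)

rng = np.random.default_rng(0)
d, k = 8, 4
w, V = rng.normal(size=d), rng.normal(size=(d, k))
x = np.zeros(d)
x[[0, 4, 6]] = 1.0  # one-hot input over 3 fields
print(np.isclose(fm_naive(w, V, x), fm_fast(w, V, x)))  # True
```

With one-hot inputs only \(m\) entries of \(\mathbf{x}\) are non-zero, so in practice both sums run over the active features only.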

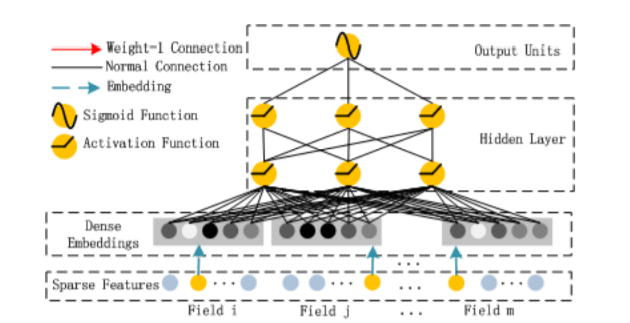

2.2 Deep Component

The deep component is a feed-forward neural network which is used to learn high-order feature interactions.

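As the figure shows, an embedding layer compresses the sparse input vector into a low-dimensional, dense, real-valued vector before it is fed into the hidden layers. Each field is embedded separately, and although different fields may have input vectors of different lengths, all field embeddings have the same length \(k\). The latent vectors of the FM component serve as the weights of this embedding layer, so they are learned jointly by both components.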
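A minimal sketch of the deep component for one-hot inputs, assuming NumPy, ReLU hidden activations, and a linear output unit so that \(y_{DNN}\) is a score to be added to \(y_{FM}\) before the final sigmoid (the activation choice and layer sizes are assumptions, and `deep_component` is a hypothetical name). For a one-hot field, multiplying the field vector by its block of `V` simply selects one row, so the embedding layer reduces to a row lookup into the same `V` the FM uses.

```python
import numpy as np

def deep_component(V, active, layers):
    """Sketch of the DNN part of DeepFM for one-hot inputs.

    V      : (d, k) latent vectors, the same matrix the FM component uses
    active : list of m active feature indices, one per field
    layers : list of (W, b); hidden layers use ReLU (assumption), the last is linear
    """
    # Embedding layer: e_i = V[active_i]; concatenate into a^(0) of length m*k.
    h = V[active].reshape(-1)
    for W, b in layers[:-1]:
        h = np.maximum(0.0, h @ W + b)  # a^(l+1) = relu(W^(l) a^(l) + b^(l))
    W_out, b_out = layers[-1]
    return float((h @ W_out + b_out)[0])  # scalar y_DNN

rng = np.random.default_rng(0)
d, k, m = 8, 4, 3
V = rng.normal(size=(d, k))
layers = [(rng.normal(size=(m * k, 16)), np.zeros(16)),  # hidden layer
          (rng.normal(size=(16, 1)), np.zeros(1))]       # output layer
y_dnn = deep_component(V, [0, 4, 6], layers)
```

Frameworks typically implement the lookup with a dedicated embedding table rather than a dense matrix product, which is equivalent for one-hot inputs.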

3. Relationship with other Neural Nets

Existing models tend to be strongly biased toward either low- or high-order interactions, or they require expert feature engineering.

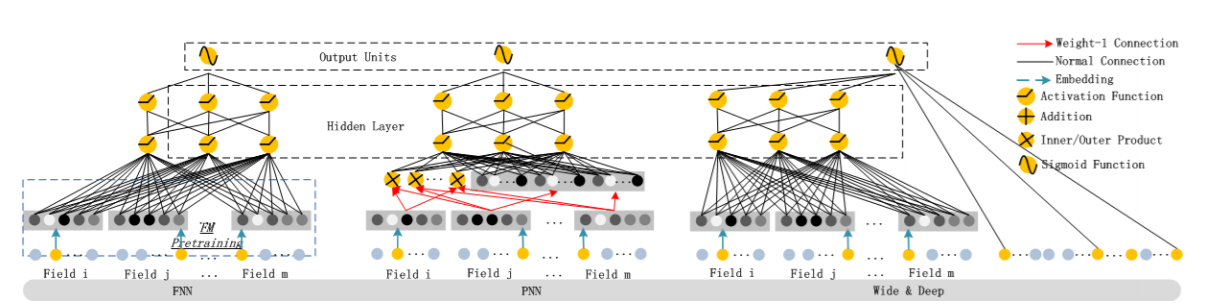

3.1 Factorization-machine supported Neural Network (FNN)

FNN is a feed-forward neural network whose embedding layer is initialized by a pre-trained FM. This pre-training strategy has two limitations:

- the embedding parameters may be overly influenced by the FM

- the pre-training stage adds overhead, which reduces training efficiency

In addition, FNN captures only high-order feature interactions.

3.2 Product-based Neural Network (PNN)

PNN inserts a product layer between the embedding layer and the first hidden layer to capture high-order feature interactions, but it fails to capture low-order interactions. The output of the product layer is connected to all units of the first hidden layer, whereas in DeepFM the output of the product (FM) layer connects only to the final output unit.

3.3 Wide and Deep Learning Framework

The wide component requires expert feature engineering (manually designed cross-product features). A straightforward extension of this model is to replace the logistic regression with a factorization machine. This extension is similar to DeepFM, but DeepFM shares the feature embeddings between the FM and deep components.
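To make the sharing difference concrete, here is a minimal sketch that counts embedding weights, assuming both variants use \(k\)-dimensional embeddings over the same \(d\)-dimensional input (the sizes below are illustrative, not from the paper, and `embedding_params` is a hypothetical helper). Beyond the smaller table, sharing means every row of the single table receives gradients from both the FM and the deep component.

```python
def embedding_params(d, k, shared):
    """Number of embedding weights in an FM + DNN hybrid.

    shared=True : DeepFM, one (d, k) table read by both the FM and the DNN.
    shared=False: hypothetical Wide & Deep variant with an FM wide part,
                  where the FM and the DNN each learn a separate (d, k) table.
    """
    tables = 1 if shared else 2
    return tables * d * k

# Illustrative sizes only: a 1M-dimensional sparse input and k = 10.
print(embedding_params(1_000_000, 10, shared=True))   # 10000000
print(embedding_params(1_000_000, 10, shared=False))  # 20000000
```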

Therefore, among the models compared here, DeepFM is the only one that:

- requires no pretraining

- requires no feature engineering

- captures both low and high order feature interactions